Trump Criticizes State AI Laws: What Tech Leaders Should Know

Trump Condemns State AI Laws Amid Regulatory Uncertainty

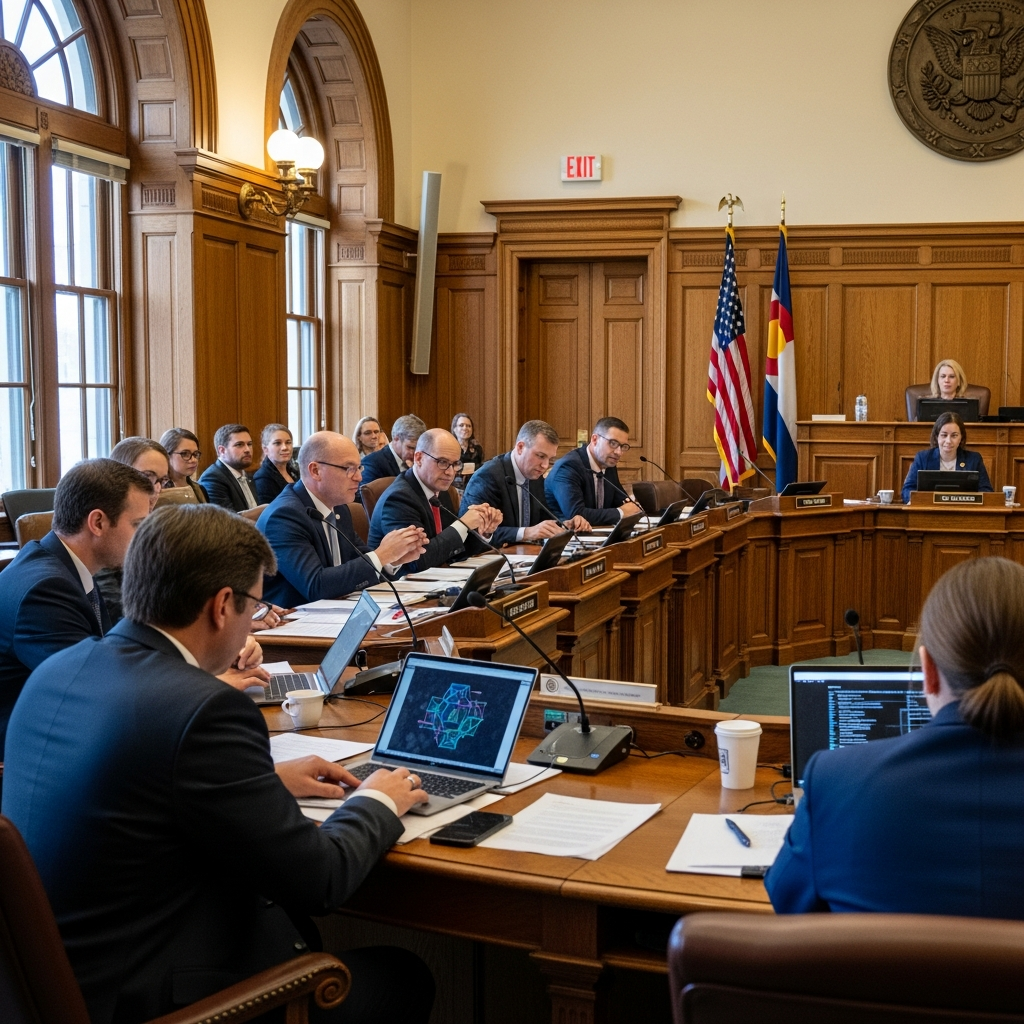

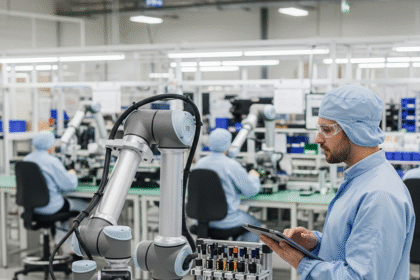

As the federal government delays comprehensive artificial intelligence (AI) legislation, states are stepping in, creating a patchwork of rules that is sparking debate among policymakers, business leaders, and President Donald Trump. Colorado is leading the way: it and other states have enacted laws governing AI’s use in sensitive areas such as employment, education, housing, healthcare, and credit, while seeking to safeguard personal data and reduce discrimination risks.

Colorado’s Ambitious, Risk-Based AI Law

Colorado’s law, signed by Governor Jared Polis in 2024, targets high-risk AI applications and sets a precedent other states are watching closely. Implementation has been postponed until June 2026 after significant legislative debate, but the law underscores both the urgency and the complexity of regulating emerging technology.

Even as the state pushes toward clearer guardrails, Polis and others have flagged ambiguities and potential innovation headwinds, highlighting the balancing act of encouraging AI development while mitigating harm.

Who’s in scope

The law applies broadly to companies that operate in Colorado or offer services to users there, regardless of where the company is physically located, when AI is used in contexts with significant legal or economic impacts. Everyday, low-risk tools (e.g., basic chatbots) are not the primary focus.

Tyler Thompson, partner at Reed Smith, notes that Colorado’s approach emphasizes transparency and risk assessments for impactful AI use, aligning with global frameworks like the EU AI Act as well as ISO and NIST guidance—steps many companies view as “future proof.”

Federal Backdrop: Trump’s Opposition to State AI Rules

Federal uncertainty continues to loom. At this week’s U.S.-Saudi Investment Forum, Trump criticized state-level AI regulations as a “disaster,” warning against potentially “woke” policies. Earlier in the year, a proposed ten-year ban on state and local AI rules was considered for a major federal bill but was ultimately removed. Lawmakers are now weighing standalone legislation or folding preemption measures into defense policy bills.

Media reports indicate Trump has drafted an executive order instructing the Attorney General to sue states that enact their own AI rules, though practical and constitutional limits may constrain presidential authority in this area.

How Colorado Compares: EU AI Act and California Privacy

Compared to the EU AI Act, Colorado’s law is more streamlined but similarly emphasizes risk-based controls, transparency, and accountability. California, meanwhile, has expanded its privacy regime in ways that indirectly regulate AI, though many provisions primarily affect larger enterprises processing substantial volumes of personal data.

What Tech Leaders Should Do Now

Businesses are already moving toward adaptable, cross-jurisdictional AI governance. Focus on practical steps that deliver value regardless of how the rules evolve:

- Inventory AI use cases: Map systems, models, and vendors; flag “high-risk” applications tied to employment, credit, housing, education, or healthcare.

- Risk assessments: Evaluate intended use, training data, performance, fairness, privacy, security, and impacts on protected classes.

- Transparency & disclosures: Provide user-facing notices where decisions materially affect individuals; document model limitations and human oversight.

- Governance & accountability: Establish an AI policy, RACI roles, approval gates, incident response, and model lifecycle controls (from design to decommissioning).

- Vendor management: Update contracts and DPAs; require model documentation, testing results, and change notifications.

- Testing & monitoring: Adopt pre-deployment validation and continuous monitoring for bias, drift, robustness, and security.

- Human-in-the-loop: Define when and how human review overrides automated decisions; maintain adjudication records.

- Documentation readiness: Keep artifacts aligned to ISO/NIST practices for easy adaptation to Colorado, EU, or future federal requirements.

- Training & culture: Offer targeted training for engineers, product, and compliance teams; set clear escalation paths.
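
The first few steps above (inventory, high-risk flagging, and checking for missing assessments or notices) can be prototyped in a few lines. The following is a minimal sketch, not a compliance tool: the `AIUseCase` fields, the `HIGH_RISK_DOMAINS` set, and the vendor name are illustrative assumptions, and the domain list simply mirrors the areas named in this article rather than any statutory definition.

```python
from dataclasses import dataclass

# Assumption: domains taken from the article's list of sensitive areas,
# not from the text of any statute.
HIGH_RISK_DOMAINS = {"employment", "credit", "housing", "education", "healthcare"}


@dataclass
class AIUseCase:
    """One row in a hypothetical AI inventory."""
    name: str
    domain: str
    vendor: str = "in-house"
    makes_consequential_decision: bool = False
    has_risk_assessment: bool = False
    has_user_notice: bool = False


def is_high_risk(uc: AIUseCase) -> bool:
    # Flag only systems that both sit in a sensitive domain and
    # materially affect individuals.
    return uc.domain.lower() in HIGH_RISK_DOMAINS and uc.makes_consequential_decision


def governance_gaps(uc: AIUseCase) -> list[str]:
    # Return the artifacts still missing for a high-risk use case.
    if not is_high_risk(uc):
        return []
    gaps = []
    if not uc.has_risk_assessment:
        gaps.append("risk assessment")
    if not uc.has_user_notice:
        gaps.append("user-facing notice")
    return gaps


inventory = [
    AIUseCase("resume screener", "employment", vendor="ExampleVendor",
              makes_consequential_decision=True),
    AIUseCase("support chatbot", "customer service"),
    AIUseCase("loan pre-approval", "credit", makes_consequential_decision=True,
              has_risk_assessment=True, has_user_notice=True),
]

# Map each high-risk use case to its open gaps.
report = {uc.name: governance_gaps(uc) for uc in inventory if is_high_risk(uc)}

for name, gaps in report.items():
    print(name, "->", ", ".join(gaps) if gaps else "no open gaps")
```

Keeping the inventory as structured records rather than a spreadsheet of free text makes it easier to re-run the same checks when a jurisdiction changes its definition of high-risk: only the flagging function needs to change.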

Timeline and Watchpoints

- Colorado: Effective date postponed to June 2026—expect clarifying guidance and potential amendments.

- Federal: Monitor potential preemption efforts in standalone bills or defense packages; watch for executive actions and litigation.

- States: Track copycat or alternative frameworks as legislatures reconvene; anticipate sector-specific add-ons.

- Standards: Continue aligning with EU AI Act risk management, ISO, and NIST guidance for “future proof” compliance.

Industry & Market Roundup

Nvidia earnings temper bubble fears—for now

Nvidia posted better-than-expected results, with quarterly revenue reaching $57 billion and continued strength in data center demand. Markets rallied briefly, though concerns about stretched valuations remain.

Forbes CIO Summit spotlights transformation leaders

At its CIO Summit in New York, Forbes celebrated innovation and released its CIO Next List, highlighting leaders steering digital transformation across fast casual dining, fashion, payments, and information services.

Google unveils Gemini 3

Google launched Gemini 3, an advanced AI model featuring improved reasoning and interactive UI capabilities. Integration across Google products has contributed to a surge in cofounder Larry Page’s net worth.

Key Takeaways for Tech Leaders

- Expect continued federal uncertainty and potential preemption bids; prepare for litigation and state-by-state divergence.

- Colorado’s risk-based framework is influential—even with a 2026 start date—and aligns with global best practices.

- “Future proof” your program with transparent policies, risk assessments, and strong lifecycle governance.

- Track high-risk use cases closely and document decisions to adapt quickly as rules crystallize.

As the AI policy debate intensifies, building adaptable compliance frameworks and deepening visibility into organizational AI use will position companies to navigate shifting state and federal requirements with confidence.