Meta signs $14.2B AI compute deal with CoreWeave


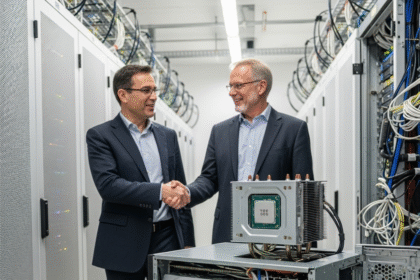

CoreWeave has secured a multiyear, $14.2 billion agreement to supply Meta Platforms with cloud-based AI compute. The commitment extends into the next decade as the tech industry races to lock down processing power. The contract runs through 2031, with an option to renew for one additional year.

Key points

- Long-term pact runs through 2031, with a one-year renewal option.

- Deal reflects the scramble to secure GPUs for training and deploying generative and agentic AI.

- Analysts expect AI compute demand to stay strong into the 2030s despite bubble concerns.

Deal context and market momentum

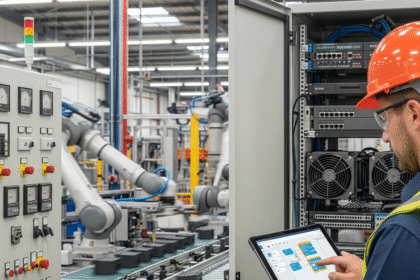

The deal arrives amid a broader surge in AI infrastructure spending. It follows reports of a far larger, $300 billion arrangement between OpenAI and Oracle to build massive data centers for generative AI. It also comes alongside other headline investments, including Nvidia's reported $100 billion commitment to OpenAI and its $5 billion investment in U.S. chipmaker Intel. The momentum reflects escalating demand for compute to train and run generative and agentic AI models, as well as for emerging use cases such as humanoid robotics.

Why CoreWeave—and what it signals

Analysts say Meta's move validates CoreWeave's position in the market. Nick Patience of the Futurum Group noted that Meta needs reliable access to compute and that its choice of CoreWeave underscores confidence in the provider's capacity well into 2031. CoreWeave belongs to a newer cohort of AI-focused cloud compute companies, alongside Nscale, Lambda Labs, and Nebius, that compete with hyperscalers such as AWS, Google, and Microsoft. Those hyperscalers are simultaneously developing their own AI chips and infrastructure. Nvidia, which operates its own cloud platform, is also a major force in the AI compute market.


What it means for Meta

Microsoft has been CoreWeave's anchor customer to date, and CoreWeave also supplies OpenAI. For Meta, the agreement supports its expansive AI ambitions: the company has embedded generative AI across Facebook, Instagram, and WhatsApp and is a leading backer of open models through its Llama series.

Meta is building its own proprietary AI data centers, but Omdia analyst Torsten Volk observed that CoreWeave can provision GPUs at a larger scale than Meta's in-house capacity allows, optimize their utilization, and ensure power availability even during peak demand. He added that Meta's priority is advancing its AI products rather than operating a hyperscale GPU cloud.

Is there a risk of overbuild?

The scale of current projects has sparked debate about whether today’s AI build-out could overshoot demand. In addition to the OpenAI–Oracle initiative, the sprawling Stargate data center effort involving OpenAI, Oracle, SoftBank, and others has fueled questions about potential overcapacity.

Patience, however, expects AI compute needs to remain strong into the 2030s. He acknowledged bubble-like elements in the market but argued it’s unlikely these facilities will be idled mid-decade, given ongoing growth in AI processing requirements.

The bottom line

By tying up long-term compute with CoreWeave, Meta signals it intends to sustain—and expand—its AI efforts for years to come. The pact also highlights how partnerships between AI developers and specialized cloud providers are becoming central to securing the GPU resources that underpin the next wave of AI applications.